Interactive code examples for fun and profit

I believe that most technical writing (from product documentation to online courses to blog posts) benefits from code examples, especially interactive ones that the reader can run and modify without leaving the docs or installing anything.

Let me prove my point by showing you some scenarios involving interactive code snippets. I'll also show how to implement such scenarios using an open source tool I've developed for this very purpose.

Tutorials • Release notes • Reference documentation • Blogging • Education • Implementation

Tutorials and how-to guides

📝 Use case: You are the developer of the Deta Base service, which provides hassle-free cloud storage for non-critical data. You'd like to teach developers how to use your API.

Deta Base is a simple cloud NoSQL database. It stores arbitrary items, identified by keys, and groups them into collections. To use Deta Base, create a new collection and issue an API key (I will use a demo collection and key in this tutorial).

Suppose we store chat history in the messages collection, where each item is a single message. Let's add some items with a PUT /items request:

```http
PUT https://database.deta.sh/v1/c0p1npjnpwx/messages/items
content-type: application/json
x-api-key: c0p1npjnpwx_uL7C9wfpyoaNVh5Sr3s2FiubG2jrjMyt

{
  "items": [
    {
      "key": "m-001",
      "user": "Alice",
      "message": "Hi Bob!"
    },
    {
      "key": "m-002",
      "user": "Bob",
      "message": "Hello there!"
    }
  ]
}
```

```http
HTTP/1.1 207 Multi-Status
content-length: 131
content-type: application/json; charset=UTF-8

{"processed":{"items":[{"key":"m-001","message":"Hi Bob!","user":"Alice"},{"key":"m-002","message":"Hello there!","user":"Bob"}]}}
```

PUT creates new items or updates existing ones (matching them by the key property). It returns HTTP status 207 Multi-Status (quite unusual for HTTP APIs) to indicate success, along with the list of processed items in the response body.
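If you would rather make the same call from code, here is a minimal sketch using Python's standard urllib. It reuses the demo collection ID and key from the request above; the `put_items` helper is hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.request

# Demo values taken from the request above
BASE_URL = "https://database.deta.sh/v1/c0p1npjnpwx/messages"
API_KEY = "c0p1npjnpwx_uL7C9wfpyoaNVh5Sr3s2FiubG2jrjMyt"


def put_items(items):
    """Build (but do not send) a PUT /items request for the given items."""
    body = json.dumps({"items": items}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/items",
        data=body,
        headers={"content-type": "application/json", "x-api-key": API_KEY},
        method="PUT",
    )


req = put_items([
    {"key": "m-001", "user": "Alice", "message": "Hi Bob!"},
    {"key": "m-002", "user": "Bob", "message": "Hello there!"},
])
print(req.get_method(), req.full_url)
# To actually send it, pass req to urllib.request.urlopen()
```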

Let's make sure the messages have actually been saved by retrieving them with a GET /items/{key} request:

```http
GET https://database.deta.sh/v1/c0p1npjnpwx/messages/items/m-001
content-type: application/json
x-api-key: c0p1npjnpwx_uL7C9wfpyoaNVh5Sr3s2FiubG2jrjMyt
```

```http
HTTP/1.1 200 OK
content-length: 51
content-type: application/json; charset=UTF-8

{"key":"m-001","message":"Hi Bob!","user":"Alice"}
```

Yep, it's a message from Alice. Try changing the key in the URL to m-002 to get Bob's reply.
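A GET for any other key follows the same pattern. This sketch (again with a hypothetical helper name and the demo values from above) builds the request for a given key without sending it, and decodes a response body like the one shown above into a Python dict.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://database.deta.sh/v1/c0p1npjnpwx/messages"
API_KEY = "c0p1npjnpwx_uL7C9wfpyoaNVh5Sr3s2FiubG2jrjMyt"


def get_item(key):
    """Build (but do not send) a GET /items/{key} request."""
    # quote() guards against keys containing characters such as "/" or spaces
    url = f"{BASE_URL}/items/{urllib.parse.quote(key, safe='')}"
    return urllib.request.Request(url, headers={"x-api-key": API_KEY})


req = get_item("m-002")
print(req.get_method(), req.full_url)

# Decoding the response body shown above
body = '{"key":"m-001","message":"Hi Bob!","user":"Alice"}'
item = json.loads(body)
print(item["user"], "says:", item["message"])
```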

The tutorial could go on, but I think you get the idea (if you'd like a more complete example, see the API tutorials beyond OpenAPI post). Let's move on to the next use case.

Release notes

📝 Use case: You are the developer of the SQLite database engine. You'd like to show some nice features and improvements you've added in the new 3.44 release.

One of the major 3.44 changes is that aggregate functions now accept an optional order by clause after the last argument. It defines the order in which the function processes its arguments.

order by in aggregates is probably not very useful in avg, count and other functions whose result does not depend on the order of the arguments. However, it can be quite handy for functions like group_concat and json_group_array.

Let's say we have an employees table:

```sql
select * from employees;
```

```
┌────┬───────┬────────┬────────────┬────────┐
│ id │ name  │ city   │ department │ salary │
├────┼───────┼────────┼────────────┼────────┤
│ 11 │ Diane │ London │ hr         │ 70     │
│ 12 │ Bob   │ London │ hr         │ 78     │
│ 21 │ Emma  │ London │ it         │ 84     │
│ 22 │ Grace │ Berlin │ it         │ 90     │
│ 23 │ Henry │ London │ it         │ 104    │
│ 24 │ Irene │ Berlin │ it         │ 104    │
│ 31 │ Cindy │ Berlin │ sales      │ 96     │
│ 32 │ Dave  │ London │ sales      │ 96     │
└────┴───────┴────────┴────────────┴────────┘
```

Suppose we want to show employees who work in each of the departments, so we group by department and use group_concat on the name. Previously, we had to rely on the default record order. But now we can concatenate the employees in straight or reverse alphabetical order (or any other order — try changing the order by clause below):

```sql
select
  department,
  group_concat(name order by name desc) as names
from employees
group by department;
```

```
┌────────────┬────────────────────────┐
│ department │ names                  │
├────────────┼────────────────────────┤
│ hr         │ Diane,Bob              │
│ it         │ Irene,Henry,Grace,Emma │
│ sales      │ Dave,Cindy             │
└────────────┴────────────────────────┘
```
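To make the semantics concrete, here is a pure-Python sketch of what that query computes for the employees table above: group the rows by department, sort each group by name in descending order, and join the names with commas. It only illustrates the result; it is not how SQLite evaluates the aggregate.

```python
from collections import defaultdict

# (id, name, city, department, salary) rows from the employees table above
employees = [
    (11, "Diane", "London", "hr", 70),
    (12, "Bob", "London", "hr", 78),
    (21, "Emma", "London", "it", 84),
    (22, "Grace", "Berlin", "it", 90),
    (23, "Henry", "London", "it", 104),
    (24, "Irene", "Berlin", "it", 104),
    (31, "Cindy", "Berlin", "sales", 96),
    (32, "Dave", "London", "sales", 96),
]

# group by department
groups = defaultdict(list)
for _id, name, _city, department, _salary in employees:
    groups[department].append(name)

# group_concat(name order by name desc): sort each group, then join with ","
names = {
    department: ",".join(sorted(group, reverse=True))
    for department, group in sorted(groups.items())
}

for department, joined in names.items():
    print(department, joined)
```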

Now let's group employees by city using json_group_array:

```sql
select
  value ->> 'city' as city,
  json_group_array(
    value -> 'name' order by value ->> 'id'
  ) as json_names
from
  json_each(readfile('src/employees.json'))
group by
  value ->> 'city'
```

```
┌────────┬───────────────────────────────────────────────────────────────┐
│ city   │ json_names                                                    │
├────────┼───────────────────────────────────────────────────────────────┤
│ Berlin │ ["\"Grace\"","\"Irene\"","\"Frank\"","\"Cindy\"","\"Alice\""] │
│ London │ ["\"Diane\"","\"Bob\"","\"Emma\"","\"Henry\"","\"Dave\""]     │
└────────┴───────────────────────────────────────────────────────────────┘
```

Nice!

SQLite 3.44 brought more features, but again, I think you get the idea. Let's move on to the next use case.

Reference documentation

📝 Use case: You are the author of the curl tool. You decide to include playgrounds in the curl man page so that people do not have to go back to their terminals to try different command-line options.

--json <data>

Sends the specified JSON data in a POST request to the HTTP server. --json works as a shortcut for passing on these three options:

--data [arg]

--header "Content-Type: application/json"

--header "Accept: application/json"

If this option is used more than once on the same command line, the additional data pieces are concatenated to the previous before sending.

If you start the data with the letter @, the rest should be a file name to read the data from, or a single dash (-) if you want curl to read the data from stdin. Posting data from a file named 'foobar' would thus be done with --json @foobar and to instead read the data from stdin, use --json @-.

The headers this option sets can be overridden with -H, --header as usual.

Examples:

```sh
curl --json '{ "drink": "coffee" }' http://httpbingo.org/anything
```

```json
{
  "args": {},
  "headers": {},
  "method": "POST",
  "origin": "172.19.0.9:39794",
  "url": "http://httpbingo.org/anything",
  "data": "{ \"drink\": \"coffee\" }",
  "files": {},
  "form": {},
  "json": {
    "drink": "coffee"
  }
}
```

```sh
curl --json '[' --json '1,2,3' --json ']' http://httpbingo.org/anything
```

```json
{
  "args": {},
  "headers": {},
  "method": "POST",
  "origin": "172.19.0.9:34086",
  "url": "http://httpbingo.org/anything",
  "data": "[1,2,3]",
  "files": {},
  "form": {},
  "json": [
    1,
    2,
    3
  ]
}
```

```sh
curl --json @coffee.json http://httpbingo.org/anything
```

```json
{
  "args": {},
  "headers": {},
  "method": "POST",
  "origin": "172.19.0.9:35124",
  "url": "http://httpbingo.org/anything",
  "data": "{ \"drink\": \"coffee\" }\n",
  "files": {},
  "form": {},
  "json": {
    "drink": "coffee"
  }
}
```
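Conceptually, --json is request-building shorthand. The following Python sketch mimics the behavior described above: it concatenates all data pieces, reads a piece from a file when it starts with @ (or from stdin for @-), and sets the POST method plus the two JSON headers. The `build_json_request` name is hypothetical, and the sketch only builds the request; it does not reproduce curl itself.

```python
import sys
import tempfile
import urllib.request
from pathlib import Path


def build_json_request(url, *pieces):
    """Mimic curl --json: concatenate all pieces and POST them with JSON headers."""
    chunks = []
    for piece in pieces:
        if piece == "@-":
            chunks.append(sys.stdin.buffer.read())  # "@-" reads the data from stdin
        elif piece.startswith("@"):
            chunks.append(Path(piece[1:]).read_bytes())  # "@file" reads it from a file
        else:
            chunks.append(piece.encode("utf-8"))
    return urllib.request.Request(
        url,
        data=b"".join(chunks),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


# Like: curl --json '[' --json '1,2,3' --json ']' http://httpbingo.org/anything
req = build_json_request("http://httpbingo.org/anything", "[", "1,2,3", "]")
print(req.get_method(), req.data)  # POST b'[1,2,3]'

# Like: curl --json @coffee.json http://httpbingo.org/anything
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "coffee.json"
    path.write_bytes(b'{ "drink": "coffee" }\n')
    file_req = build_json_request("http://httpbingo.org/anything", f"@{path}")
print(file_req.data)
```

As in the last curl example above, the file content is sent verbatim, including its trailing newline.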

If you want to try more curl examples, see the Mastering curl interactive guide. Now let's move on to the next use case.

Blogging

📝 Use case: You are sharing your experience of comparing different object implementations in Python to find the one that uses as little memory as possible.

Imagine you have a simple Pet object with the name (string) and price (integer) attributes. Intuitively, it seems that the most compact representation is a tuple:

```python
("Frank the Pigeon", 50000)
```

Let's measure how much memory this beauty eats:

```python
import random
import string

from pympler.asizeof import asizeof

def fields():
    # random 10-letter uppercase name and 5-digit price
    name_gen = (random.choice(string.ascii_uppercase) for _ in range(10))
    name = "".join(name_gen)
    price = random.randint(10000, 99999)
    return (name, price)

def measure(name, fn, n=10_000, baseline=0):
    # average deep size per pet (the list's own overhead is spread across items)
    pets = [fn() for _ in range(n)]
    size = round(asizeof(pets) / n)
    print(f"Pet size ({name}) = {size} bytes")
    if baseline:
        print(f"x{size / baseline:.2f} to baseline")
    return size

baseline = measure("tuple", fields)
```

```
Pet size (tuple) = 153 bytes
```

153 bytes. Let's use that as a baseline for further comparison.

Since nobody works with tuples these days, you would probably choose a dataclass:

```python
from dataclasses import dataclass

@dataclass
class PetData:
    name: str
    price: int

fn = lambda: PetData(*fields())

base = measure("baseline", fields)
measure("dataclass", fn, baseline=base)
```

```
Pet size (baseline) = 161 bytes
Pet size (dataclass) = 249 bytes
x1.55 to baseline
```

Thing is, it's more than 1.5 times larger than a tuple.

Let's try a named tuple then:

```python
from typing import NamedTuple

class PetTuple(NamedTuple):
    name: str
    price: int

fn = lambda: PetTuple(*fields())

base = measure("baseline", fields)
measure("named tuple", fn, baseline=base)
```

```
Pet size (baseline) = 161 bytes
Pet size (named tuple) = 161 bytes
x1.00 to baseline
```

Looks like a dataclass, works like a tuple. Perfect. Or not?
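Why does the named tuple match the baseline? A quick standard-library check hints at the reason: a NamedTuple instance is a tuple subclass with no per-instance `__dict__`, so its shallow size equals that of a plain tuple, while a dataclass instance keeps its attributes in a separate `__dict__`. This sketch uses `sys.getsizeof` (shallow sizes only) instead of pympler, so it checks the structure rather than reproducing the numbers above.

```python
import sys
from dataclasses import dataclass
from typing import NamedTuple


@dataclass
class PetData:
    name: str
    price: int


class PetTuple(NamedTuple):
    name: str
    price: int


values = ("Frank the Pigeon", 50000)
as_tuple = values
as_named = PetTuple(*values)
as_data = PetData(*values)

# A named tuple is a tuple subclass, so it has the same shallow size
print(isinstance(as_named, tuple), sys.getsizeof(as_named) == sys.getsizeof(as_tuple))

# ...and no per-instance __dict__, unlike the dataclass
print(hasattr(as_named, "__dict__"), hasattr(as_data, "__dict__"))
```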

If you are into Python, see further comparison in the Compact objects in Python post. Better yet, save that for later and let's move on to the next use case.

Education

📝 Use case: You are teaching a "Modern SQL" course. In one of the lessons, you explain the standard way of limiting the result rows of a SELECT query.

You are probably familiar with the limit clause, which returns the first N rows according to the order by:

```sql
select * from employees
order by salary desc
limit 5;
```

```
┌────┬───────┬────────┬────────────┬────────┐
│ id │ name  │ city   │ department │ salary │
├────┼───────┼────────┼────────────┼────────┤
│ 23 │ Henry │ London │ it         │ 104    │
│ 24 │ Irene │ Berlin │ it         │ 104    │
│ 32 │ Dave  │ London │ sales      │ 96     │
│ 31 │ Cindy │ Berlin │ sales      │ 96     │
│ 22 │ Grace │ Berlin │ it         │ 90     │
└────┴───────┴────────┴────────────┴────────┘
```

But although limit is widely supported, it's not part of the SQL standard. The standard (as of SQL:2008) dictates that we use fetch:

```sql
select * from employees
order by salary desc
fetch first 5 rows only;
```

```
┌────┬───────┬────────┬────────────┬────────┐
│ id │ name  │ city   │ department │ salary │
├────┼───────┼────────┼────────────┼────────┤
│ 23 │ Henry │ London │ it         │ 104    │
│ 24 │ Irene │ Berlin │ it         │ 104    │
│ 32 │ Dave  │ London │ sales      │ 96     │
│ 31 │ Cindy │ Berlin │ sales      │ 96     │
│ 22 │ Grace │ Berlin │ it         │ 90     │
└────┴───────┴────────┴────────────┴────────┘
```

fetch first N rows only does exactly what limit N does (but it can do more).
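In list terms, order by followed by fetch first N rows only (or limit N) means "sort, then take the first N rows", and offset M adds "skip the first M rows". Here is a minimal Python sketch of those semantics on the employees data; the `offset_fetch` helper is hypothetical. Note that SQL leaves the order of tied rows unspecified, so a database may order equal salaries differently than Python's stable sort does here (the tables above list 32 before 31).

```python
# (id, salary) pairs from the employees table
employees = [
    (11, 70), (12, 78), (21, 84), (22, 90),
    (23, 104), (24, 104), (31, 96), (32, 96),
]


def offset_fetch(rows, offset=0, count=None):
    """Skip `offset` rows, then keep at most `count` rows (all of them if None)."""
    end = None if count is None else offset + count
    return rows[offset:end]


# order by salary desc fetch first 5 rows only
by_salary = sorted(employees, key=lambda row: row[1], reverse=True)
top5 = offset_fetch(by_salary, count=5)
print([emp_id for emp_id, _ in top5])
```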

fetch plays well with offset, as does limit. Try writing a query that fetches the next 2 records after skipping the first 5:

```sql
select id from employees
order by city, id
offset 5 fetch ...;
```

If you want to learn more about FETCH, see the LIMIT vs. FETCH in SQL post. In the meantime, let's move on to the implementation.

Implementation

As I mentioned earlier, I believe that most technical writing benefits from interactive examples. So I've built and open-sourced Codapi, a platform for embedding interactive code snippets virtually anywhere.

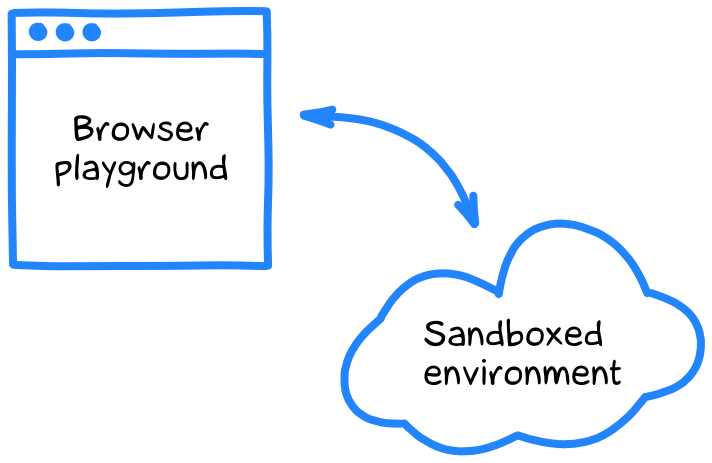

Codapi manages sandboxes (isolated execution environments) and provides an API to execute code in these sandboxes. It also provides a JavaScript widget for easier integration.

Interactive code snippets

On the frontend, Codapi is very non-invasive. If you already have static code examples in your docs, all you need to do is add a codapi-snippet element after each code sample, like this:

```html
<!-- existing code snippet -->
<pre>
def greet(name):
    print(f"Hello, {name}!")

greet("World")
</pre>

<!-- codapi widget -->
<codapi-snippet sandbox="python" editor="basic"> </codapi-snippet>
```

The widget attaches itself to the preceding code block, allowing the reader to run and edit the code like this:

```python
def greet(name):
    print(f"Hello, {name}!")

greet("World")
```

The widget offers a range of customization options and advanced features such as templates and multi-file playgrounds. There are also integration guides for popular platforms such as WordPress, Notion or Docusaurus.

Sandboxed environments

Some playgrounds (namely JavaScript, Python, PHP, Ruby, Lua, SQLite and PostgreSQL) work entirely in the browser using WebAssembly. Others require a sandbox server.

On the backend, a Codapi sandbox is a thin layer over one or more Docker containers, accompanied by a config file that describes which commands to run in those containers.

```
┌──────────────────────────┐   ┌────────┐
│     execution + API      │ → │ config │
└──────────────────────────┘   └────────┘
┌────────┐ ┌──────┐ ┌───────┐ ┌──────┐
│ python │ │ rust │ │ mysql │ │ curl │ ...
└────────┘ └──────┘ └───────┘ └──────┘
╰ containers
```

For example, here is a config for the run command in the python sandbox:

```json
{
  "engine": "docker",
  "entry": "main.py",
  "steps": [
    {
      "box": "python",
      "command": ["python", "main.py"]
    }
  ]
}
```

This is essentially what it says:

When the client executes the run command in the python sandbox, save their code to the main.py file, then run it in the python box (Docker container) using the python main.py shell command.
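Stripped of Docker, the run step in this config amounts to "write the code to the entry file, then run the step's command in that directory". The sketch below does exactly that on the local machine with subprocess. It only illustrates what the config means, with none of the isolation a real sandbox provides; `run_step` is a hypothetical name, not part of Codapi.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

config = {
    "engine": "docker",
    "entry": "main.py",
    "steps": [{"box": "python", "command": ["python", "main.py"]}],
}


def run_step(config, code):
    """Save code to the entry file and run each step's command locally (no Docker)."""
    with tempfile.TemporaryDirectory() as workdir:
        Path(workdir, config["entry"]).write_text(code)
        outputs = []
        for step in config["steps"]:
            command = list(step["command"])
            if command[0] == "python":
                command[0] = sys.executable  # current interpreter stands in for the box
            result = subprocess.run(
                command, cwd=workdir, capture_output=True, text=True, check=True
            )
            outputs.append(result.stdout)
        return "".join(outputs)


print(run_step(config, 'print("Hello, World!")'), end="")
```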

And here is a (slightly more complicated) config for the mysql sandbox:

```json
{
  "engine": "docker",
  "before": {
    "box": "mysql",
    "user": "root",
    "action": "exec",
    "command": ["sh", "/etc/mysql/database-create.sh", ":name"]
  },
  "steps": [
    {
      "box": "mysql-client",
      "stdin": true,
      "command": [
        "mysql",
        "--host",
        "mysql",
        "--user",
        ":name",
        "--database",
        ":name",
        "--table"
      ]
    }
  ],
  "after": {
    "box": "mysql",
    "user": "root",
    "action": "exec",
    "command": ["sh", "/etc/mysql/database-drop.sh", ":name"]
  }
}
```

As you can see, it involves two containers (MySQL server and client) and additional setup/teardown steps to create and destroy the database.
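One way to read such a config is as a three-phase plan: the before step, then each of the steps, then the after step. The sketch below only builds that plan as data and runs nothing. The `plan` function and the assumption that `:name` is replaced with a per-run identifier are illustrative; this is not a description of Codapi's actual implementation.

```python
import uuid

mysql_config = {
    "engine": "docker",
    "before": {
        "box": "mysql", "user": "root", "action": "exec",
        "command": ["sh", "/etc/mysql/database-create.sh", ":name"],
    },
    "steps": [{
        "box": "mysql-client", "stdin": True,
        "command": ["mysql", "--host", "mysql", "--user", ":name",
                    "--database", ":name", "--table"],
    }],
    "after": {
        "box": "mysql", "user": "root", "action": "exec",
        "command": ["sh", "/etc/mysql/database-drop.sh", ":name"],
    },
}


def plan(config, name):
    """Return (phase, box, command) tuples with ':name' substituted (assumed semantics)."""
    def render(step):
        return [name if arg == ":name" else arg for arg in step["command"]]

    phases = []
    if "before" in config:
        phases.append(("before", config["before"]["box"], render(config["before"])))
    for step in config["steps"]:
        phases.append(("step", step["box"], render(step)))
    if "after" in config:
        phases.append(("after", config["after"]["box"], render(config["after"])))
    return phases


# A hypothetical per-run name, such as "sb_1a2b3c4d"
run_name = f"sb_{uuid.uuid4().hex[:8]}"
for phase, box, command in plan(mysql_config, run_name):
    print(phase, box, " ".join(command))
```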

Thanks to Dockerfiles and declarative sandbox configs, Codapi is not limited to a fixed set of programming languages, databases and tools. You can build your own sandboxes with custom packages and software, and use them for fun and profit.

Using Codapi

Codapi is available as a cloud service and as a self-hosted version. It is licensed under the permissive Apache-2.0 license and is committed to remaining open source forever.

Here is how to get started with the self-hosted version:

If you prefer the cloud version, join the beta.

In any case, I encourage you to give Codapi a try. Let's make the world of technical documentation a little better!
